Artificial intelligence: The researcher ex machina

In the early 2020s, an AI system caused a wave of excitement in science. The company DeepMind launched a new version of the deep learning software AlphaFold, which predicts the shape of proteins with unprecedented accuracy. From the sequence of amino acids, the building blocks of proteins, the programme has learned to derive how they interact and fold into the protein's complex three-dimensional structure.

Breakthrough in medicine and biology

This ability is worth its weight in gold for basic research and medicine. Knowing the structure of a protein is the first step in understanding its function in the cell. AlphaFold gives researchers a head start, for example in the development of designer proteins for vaccines or cancer therapies. Instead of testing all possible protein variants in the laboratory, researchers let the software preselect the most promising candidates, which are then tested. Another possible application: AI can predict how genetic mutations affect a protein, which is key to understanding diseases such as Alzheimer's.

AlphaFold was trained on hundreds of thousands of known protein structures that biochemists had deciphered over decades of work. Using AI, it is now possible to derive rules from this vast amount of information in order to make precise predictions.

This flagship project shows how researchers use AI to develop new hypotheses and improve methods. Wherever technological progress in science has accumulated large sets of data, AI fuels hopes for rapid breakthroughs. What can the technology actually do for research at the moment? And where will the journey take us?

AI: A fuzzy concept

"Artificial intelligence" is not a clearly definable technology. Even though the umbrella term is used in this article, different systems are meant depending on the example. The data analysis methods are based on machine learning, an area of research that has given rise to terms such as deep learning and artificial neural networks. Chatbots, on the other hand, are based on so-called large language models.

AI in the natural sciences: From the inner life of the cell…

Answers to these questions can be found at the University of Vienna, where researchers are already using the potential of AI in a wide range of disciplines. One of them is Jonas Ries, Head of the Superresolution Microscopy for Structural Cell Biology research group at Max Perutz Labs – named after the Nobel Prize laureate Max Perutz, who first elucidated the protein structure of haemoglobin.

Ries and his team want to capture cellular processes in real time by combining machine learning with innovative microscopy techniques: "These technologies allow us to see cells at nanometre resolution and to observe proteins 'at work'," states the biophysicist.

First, the researchers want to decipher endocytosis: This is the process in which the cell absorbs substances from the outside by collecting them in pits on the cell surface, packing them into small vesicles and transporting them into the cell. Countless proteins come into play in this important biological process, through which nutrients as well as medication or viruses can enter the cell. In order to better understand the mechanism, Ries and his team want to catch the endocytosis proteins 'in the act' and observe them in the living cell.

The aim is to create a film from hundreds of thousands of high-resolution microscopy images that shows endocytosis in as much detail as possible. "Machine learning now allows us to shoot ten times more frames per second than before." This enables the research group to document the events in the cell with unprecedented accuracy. "Without AI, we could not process the required amount of data," explains Ries.

More about the research of Jonas Ries

- Video recommendation: Seeing the unseeable - Interview with Max Perutz Labs group leader Jonas Ries

- Jonas Ries on AI in science (German-language video below with English subtitles)

… to the universe in computer simulation

The example of modern microscopy clearly shows that, whereas a lack of data used to be the problem, today it is often an overabundance. AI raises the hope of processing mountains of data faster and finding meaningful patterns in them.

The Euclid space telescope provides astronomical amounts of data. Since 2023, it has observed more than 30 million objects at distances of more than ten billion light years. What can we learn from these images about the laws of nature that determine the origin of the cosmos? Oliver Hahn from the Data Science in Astrophysics and Cosmology research group at the University of Vienna aims to get to the bottom of this question with the help of simulations on a supercomputer. Together with his team, he wants to model the universe: from the formation of the first density fluctuations after the Big Bang to today's distribution of galaxies.

The astrophysicists then compare these simulations with observations from space: Does the computer simulation predict the actual distribution of matter in the universe? Or is the theoretical model incomplete because some physical processes are still unknown? Perhaps these processes are the missing pieces of the puzzle needed to understand what happened after the Big Bang and how galaxy clusters formed.

Here, AI comes into play again: "Our simulations require a lot of time and expense," explains the physicist. "Machine learning can simplify the underlying mathematical models." It works like this: "AI provides a replacement model that allows calculations to run faster without losing too much quality. Or you can save on individual simulation steps. Often you sacrifice a bit of accuracy in doing so, but it allows you to achieve your goals faster."
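The idea of a replacement model can be illustrated with a toy example. The following Python sketch is not the method used by Hahn's group: the "simulation" is a hypothetical stand-in (a simple decay equation solved in many small steps), and plain interpolation between a handful of precomputed runs is swapped in for a trained machine learning model. All function names and numbers are assumptions made for illustration.

```python
import bisect


def expensive_simulation(decay_rate, steps=20_000):
    """Toy 'simulation': integrate dy/dt = -decay_rate * y from t=0 to t=1
    with many small Euler steps (slow on purpose)."""
    y = 1.0
    dt = 1.0 / steps
    for _ in range(steps):
        y += dt * (-decay_rate * y)
    return y


def train_surrogate(lo, hi, n_samples):
    """Run the expensive simulation at a few evenly spaced parameter values
    and keep the results as a lookup table (the 'replacement model')."""
    xs = [lo + (hi - lo) * i / (n_samples - 1) for i in range(n_samples)]
    ys = [expensive_simulation(x) for x in xs]
    return xs, ys


def surrogate(model, x):
    """Cheap approximation: interpolate linearly between the two nearest
    precomputed runs instead of simulating again."""
    xs, ys = model
    i = bisect.bisect_right(xs, x)
    i = min(max(i, 1), len(xs) - 1)  # stay inside the table
    x0, x1 = xs[i - 1], xs[i]
    y0, y1 = ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Pay the simulation cost once for 21 parameter values ...
model = train_surrogate(0.0, 2.0, 21)
# ... then answer new queries almost instantly, at a small cost in accuracy.
approx = surrogate(model, 0.73)
exact = expensive_simulation(0.73)
print(f"surrogate: {approx:.5f}, full simulation: {exact:.5f}")
```

The trade-off mirrors the one described in the quote: the lookup is far cheaper than a new run, but its answer deviates slightly from the full calculation.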

More about the research of Oliver Hahn

The large-scale structure of the cosmos reflects the fundamental laws of astrophysics. Cosmologists are trying to understand them. In a new publication in the journal Physical Review Letters, Oliver Hahn and his colleagues describe a new, improved mathematical approach for fast and consistent simulations of the universe.

AI training as the critical issue

While Jonas Ries and Oliver Hahn are dealing with huge amounts of data that they tackle using AI, other areas such as medicine lack high-quality training data. Yet such training data are needed to make predictions with machine learning possible, explains Claudia Plant, Professor of Data Mining at the Faculty of Computer Science. "Data that provides the necessary knowledge context is particularly valuable."

For example, imagine an AI system that is supposed to distinguish between photos of dogs and cats on the basis of Internet images. During training, each photo must bear the label 'dog' or 'cat'. The AI links this 'knowledge' with the corresponding patterns in the image data that are typical of dogs or cats.
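Learning from labelled examples can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how image recognition systems work in practice: instead of photos, each animal is described by two made-up numbers (hypothetical "ear pointiness" and "snout length" scores), and a basic nearest-centroid rule stands in for a neural network.

```python
from collections import defaultdict


def train(examples):
    """Learn one 'typical' feature vector (centroid) per label
    from a list of (features, label) pairs."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for k, value in enumerate(features):
            sums[label][k] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Assign the label whose typical pattern is closest to the new example."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical training data: (ear pointiness, snout length), each with a label.
labelled_examples = [
    ((0.90, 0.20), "cat"),
    ((0.80, 0.30), "cat"),
    ((0.85, 0.25), "cat"),
    ((0.30, 0.80), "dog"),
    ((0.40, 0.70), "dog"),
    ((0.20, 0.90), "dog"),
]

centroids = train(labelled_examples)
print(predict(centroids, (0.75, 0.30)))  # an unlabelled, cat-like example
```

Without the labels, the training step would have nothing to link the patterns to, which is why labelled data are so valuable.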

In medicine, you could train the model to recognise subtle differences between images of healthy and diseased tissue. Due to the GDPR, however, labelled data from patients are difficult to obtain. "Yet these would be important if we want AI systems to be able to, say, distinguish between 'Alzheimer's disease' and 'no Alzheimer's disease' in brain scans. And sometimes we have too little data, because the disease in question is too rare," explains the computer scientist. Plant is currently developing machine learning methods for more efficient therapies against depression.

AI as a black box

But there is another problem: Many AI applications are not transparent. They do not reveal how and why they arrived at a result. Especially in medicine, this can be problematic. For example, machine learning models that were meant to detect COVID-19 infections have misclassified X-ray images of the lungs. The model had learned to distinguish minute differences in the image background instead of clinically relevant features of the tissue. Therefore, it was medically useless. Unless the interpretability and explainability of AI improve, it is of limited use where there is a lot at stake.
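How a model can latch onto the wrong signal is easy to reproduce in miniature. The following Python sketch uses invented data and a very simple learner that picks whichever single feature best matches the labels in the training set. It is a hypothetical illustration of the effect, not a reconstruction of the actual COVID-19 models.

```python
def accuracy(examples, feature_index):
    """Share of examples whose chosen feature value equals the label."""
    hits = sum(
        1 for features, label in examples if features[feature_index] == label
    )
    return hits / len(examples)


def fit_stump(examples):
    """Pick the single feature that best matches the labels in training."""
    n_features = len(examples[0][0])
    return max(range(n_features), key=lambda k: accuracy(examples, k))


# Invented data. Features: (tissue finding, image background), label 1 = infected.
# In the training hospital, all infected patients happened to be imaged on a
# scanner that leaves a distinctive background, so background == label.
train_set = [
    ((1, 1), 1), ((1, 1), 1), ((1, 1), 1), ((1, 1), 1), ((0, 1), 1),
    ((0, 0), 0), ((0, 0), 0), ((0, 0), 0), ((0, 0), 0), ((1, 0), 0),
]
# In a second hospital, the background is unrelated to the diagnosis.
test_set = [
    ((1, 0), 1), ((1, 1), 1), ((1, 0), 1), ((0, 1), 1),
    ((0, 1), 0), ((0, 0), 0), ((0, 1), 0), ((1, 0), 0),
]

TISSUE, BACKGROUND = 0, 1
chosen = fit_stump(train_set)
print("feature chosen in training:", "background" if chosen == BACKGROUND else "tissue")
print("accuracy in training:", accuracy(train_set, chosen))
print("accuracy in new hospital:", accuracy(test_set, chosen))
print("accuracy of tissue feature in new hospital:", accuracy(test_set, TISSUE))
```

The learner looks perfect on its training data, yet performs no better than a coin toss elsewhere. Because nothing in its output says which feature it relied on, the flaw only shows up when someone inspects the model or tests it on independent data.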

That AI can be a black box is not always a problem, says the physicist Oliver Hahn: "It depends on my goal. If the AI system finds a model that accelerates my calculations, I can live with it. But if it gives me a prediction of a particular physics problem, it is important to understand how it made this prediction."

Even if more and more solutions for explainable AI emerge, no researcher will ever be able to rely on it blindly. "Scientists are trained not to trust their data immediately," Jonas Ries points out. For the biophysicist from the Department of Structural and Computational Biology of the University of Vienna, even AI does not change this rule: "In molecular biology, you always have a black box. Each experiment has unknown parameters." Especially in the case of AI results, you have to look closely. "But maybe this can eventually be done by another AI," says Ries with a smile.

The strengths and weaknesses of the technology therefore depend on the scientific question. The number of fields of research in which AI may be used is increasing. Modern digital technologies open up new possibilities even in areas that we do not normally associate with large data sets.

Ctrl-F for ancient texts

One example is the Austrian National Library. The partly AI-powered digitisation of more than 3.5 million objects is gradually transforming the vast knowledge archive into bits and bytes. What used to be reserved for the human eye is now becoming a computer-readable data resource.

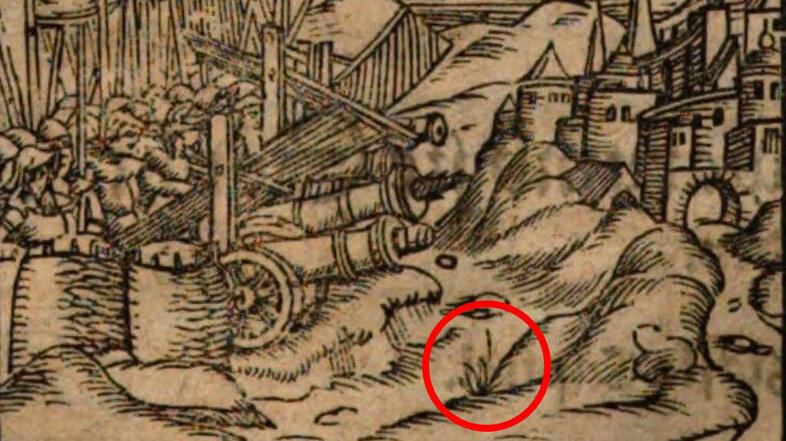

The digital humanities take advantage of this. This field of research between the humanities and computer science is the métier of Michela Vignoli, PhD candidate at the Faculty of History and Cultural Studies at the University of Vienna. In her project, she develops AI methods for the evaluation of large collections of texts, so-called corpora. "My research is at the interface between traditional historical studies and data science," explains the historian. She focuses on travel literature about the Ottoman Empire from the years 1501-1850.

The texts show how Western European scholars perceived the local population and natural landscape. "It is about representations of the country and people on pilgrimages or diplomatic trips. We are investigating how these changed over time," says Vignoli. She is developing a deep learning model that automatically makes images and text passages searchable for certain depictions of nature – comparable to the search function in a digital document.

More about the research of Michela Vignoli

"Doctor it!" is the podcast of PhD candidates in the humanities and the social sciences at the University of Vienna. In episode 13, Michela Vignoli and Marvin Stecker from the Computational Communication Lab talk about their research and AI in the social sciences and humanities (podcast in English).

Video recommendation: Michela Vignoli - Impact of AI: Gamechanger for Image Classification in Historical Research?

Gamechanger AI?

For historical research, this is a powerful tool that opens up new perspectives. "Until now, the main research focus has been on the text. Being able to include computer-aided image analysis on a large scale will advance our field," says the historian. However, she does not believe that traditional text-based research will become obsolete as a result of increasing automation: "The digital methods should be seen more as a complement and are useful for large data pools. However, it is very important to interpret the sources critically from various points of view." AI automation cannot offer this yet.

AI has not yet fundamentally changed the natural sciences either. "I currently see more gradual change than a sudden revolution," says Oliver Hahn. Jonas Ries echoes this: "While AI makes our method faster and more efficient, we are not yet discovering completely new processes or proteins."

However, for some steps in the process, AI will make a big difference. "A chatbot with access to the academic literature, which facilitates research and the process of summarising and may even make new questions visible, would be a dream come true for every researcher," explains Oliver Hahn. It is only a matter of time before this becomes reality. AI assistants already make it easier to write research proposals and papers. The two scientists agree that programming new algorithms will also become increasingly accessible.

Concerns about AI fakes in science

Science is not immune to the downsides of generative AI. Careless and non-transparent use of chatbots could affect the quality of scientific articles. In the worst case, chatbots are even misused to generate fake data and research articles. Increasingly sophisticated image generators exacerbate the problem of fake photos and images, especially in biology and medicine. Watermarks and detection systems could help. Ultimately, however, the reproduction of important results needs to be promoted more strongly in order to detect fraud, Bernd Pulverer of the journal EMBO Reports tells nature.com.

Early training in AI skills

However, text and image generators such as ChatGPT and Midjourney could undermine the credibility of science if they are misused to fake data (see info box). Some also caution against another so-called 'reproducibility crisis' if researchers use machine learning without knowing its pitfalls. But the historian Michela Vignoli is optimistic that a sensible use of AI is possible: "I think that we will establish a way of using AI tools that is compatible with academic standards. This competence should already be trained during the course of studies."

In addition, computer scientist Claudia Plant believes that the value of interdisciplinary collaboration, especially in AI-enabled projects, should be taught early on. "It is important to understand both the opportunities and the pitfalls of the technology in a project. When researchers work closely with AI experts and data scientists, it fosters a research process that accelerates progress. AlphaFold is a good example of this."

What are the limits of what is possible? Will AI one day move beyond the role of a process accelerator and develop new concepts on its own? "We are just beginning to understand what will be possible," says Oliver Hahn, "but at the moment AI is a tool and not an active force." Even though some AI systems have already run experiments autonomously, AI will not be able to function without a human critical thinker who plans, prioritises and interprets the project, say our experts: after all, it is the researchers who ask the questions.

Oliver Hahn: "The interpretation and structuring of observational data as well as the discovery of interesting patterns in data sets will increasingly fall to AI."

Claudia Plant: "Whenever you have to test a lot of possibilities in a short timespan, a computer can do this much better than a human. AI is already accelerating the life sciences."

Jonas Ries: "AI is already good at writing programmes. Perhaps it will soon be creating data analysis software for us or developing new algorithms."

Michela Vignoli: "AI will also advance historical studies. It is already facilitating the combined analysis of texts and images."

- Website of Oliver Hahn

- Data Science in Astrophysics & Cosmology

- Website of Claudia Plant

- Faculty of Computer Science

- Interview with Claudia Plant

- Website of Jonas Ries

- Department of Structural and Computational Biology

- Website of Michela Vignoli

- ONiT: Ottoman Nature in Travelogues 1501-1850

- Reading recommendation: AI in science - what 1,600 researchers think

- Semester question: Focus on AI in the Rudolphina magazine