GeoAI: Is intelligence spatial?

This semester started by asking, "Do we know what AI will know?" While AI systems do not yet know, they can represent, synthesize, and draw inferences. Interestingly, these steps are deeply geographic or at least spatial, and in the following I will outline why this matters from the perspective of GeoAI research.

What is GeoAI?

As an interdisciplinary field, GeoAI (Geospatial Artificial Intelligence) combines geography, geosciences, cognitive science, and computer science. It studies how AI technologies can address pressing challenges in geography and the geosciences (AI4Geo). It also explores how spatial thinking and spatial analysis can improve AI's performance in tasks that are not explicitly geographic (Geo4AI), thus contributing back to the broader AI community. GeoAI has been applied in disaster response, public health, biodiversity studies, traffic forecasting, crime prediction, and more, as well as to uncover and mitigate geographic biases in general AI systems. In the following, we will explore how geographic space shapes what AI knows and why this matters.

The Linguistic Roots of AI

To communicate effectively with us, AI systems need language models and relevant information to discuss. Today's language models are inspired by linguistic theories from the 1950s, specifically distributional semantics, which postulates that "[y]ou shall know a word by the company it keeps." Although the now ubiquitous Large Language Model (LLM) moniker may suggest otherwise, the breakthrough we are witnessing now is less about size alone and more about the ability of these models to better represent the dynamic nature of language, where meaning (semantics) emerges during conversation and is context-dependent.
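To make the distributional idea concrete, the following minimal sketch builds count-based context vectors from a tiny invented corpus and compares words by cosine similarity. The corpus, the window size of two, and the helper names are assumptions for illustration; modern LLMs learn dense, contextual representations rather than raw co-occurrence counts.

```python
from collections import Counter, defaultdict
import math

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words appearing within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Toy corpus invented for illustration.
corpus = [
    "the river flooded the valley after heavy rain",
    "the lake flooded the valley after heavy rain",
    "the city raised the tax on housing",
]
vecs = cooccurrence_vectors(corpus)
print(cosine(vecs["river"], vecs["lake"]))  # close to 1.0
print(cosine(vecs["river"], vecs["tax"]))   # much lower
```

Because "river" and "lake" keep identical company in this toy corpus, their vectors point in the same direction, while "tax" shares only the word "the" with "river".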

When AI chatbots built on these new LLMs were first introduced to the public, their knowledge came almost exclusively from the language model's training data. This often led to hallucinations, in which chatbots made up plausible-sounding facts, often with absurd results. Similarly, they could only recall information about the past, i.e., up to the cutoff date of their training data.

Data not Dreams

Hence, today's AI systems use several forms of augmentation. For instance, when a user's question requires solving a numerical problem, such as assessing how population density and real estate demand will change in Vienna by 2030, AI systems first translate the relevant parts of the question into a programming language. Next, they run the code they have written, receive the results, and seamlessly include them in the natural-language conversation. Another form of augmentation, called retrieval-augmented generation (RAG), has the AI first retrieve relevant information from predefined sources before generating its answer. Consequently, we can now expect an actual population forecast extracted from a reputable source, whereas before, AI chatbots would serve us a made-up number with great self-confidence.
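The retrieval step can be sketched as follows. This is a minimal illustration, assuming a hypothetical in-memory document store and using plain word overlap as a stand-in for the vector search that real RAG systems typically use; the passage text is a placeholder rather than real data, and the call to the language model itself is omitted.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=1):
    """Rank documents by word overlap with the query (a stand-in for vector search)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d["text"])), reverse=True)
    return ranked[:k]

def build_prompt(query, passages):
    """Prepend retrieved passages so the model answers from them rather than from memory."""
    context = "\n".join(f"[{p['source']}] {p['text']}" for p in passages)
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {query}"

# Hypothetical document store; source labels and texts are placeholders.
documents = [
    {"source": "stats-office", "text": "Population forecast for Vienna in 2030: <value from official source>"},
    {"source": "weather-service", "text": "Heavy rain warning issued for the Danube region"},
]
query = "What is the population forecast for Vienna in 2030?"
top = retrieve(query, documents)
prompt = build_prompt(query, top)
print(prompt)
```

The generated prompt, not the model's memory, now carries the figure the answer should be based on, which is what separates retrieval from hallucination.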

Knowledge is Spatial and Temporal

What might seem like a technical detail packs a significant punch. The meaning of the terminology used in these data sources, such as "water level", "biotic resistance", or "immigrant", is (implicitly) fixed during their creation and remains static afterward. The same holds for many other metadata properties that govern the dataset's future reuse. It is this discrepancy between static data semantics and the fluid, context-dependent nature of meaning in human discourse that has plagued data reuse since the very dawn of data and now increasingly challenges AI systems. In a mortgage dataset, for example, "flood risk" might refer to a narrowly defined long-term probability of significant property damage. In a casual conversation, however, it might mean an immediate danger to someone's home during heavy rain.
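One way to make a dataset's fixed semantics explicit is to store the definition alongside the data and check it against the meaning a user intends. The sketch below is hypothetical: the `TermDefinition` fields and the single time-horizon check are simplifications for illustration, not a metadata standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TermDefinition:
    term: str
    meaning: str       # definition fixed when the dataset was created
    time_horizon: str  # e.g. "long-term" or "immediate"

# Hypothetical metadata for a mortgage dataset's "flood risk" column.
MORTGAGE_FLOOD_RISK = TermDefinition(
    term="flood risk",
    meaning="long-term probability of significant property damage",
    time_horizon="long-term",
)

def matches_intent(definition, asked_time_horizon):
    """Return True only if the data's fixed meaning fits what the user is asking about."""
    return definition.time_horizon == asked_time_horizon

# A user worried about tonight's storm means "immediate" danger.
print(matches_intent(MORTGAGE_FLOOD_RISK, "immediate"))  # False
```

Recording the definition does not resolve the mismatch, but it lets a system detect that the stored figure answers a different question than the one asked.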

Handling the Diversity of Data and Definitions

It gets really interesting when we add a spatial and temporal component. So far, we have conveniently ignored the cultural and environmental backdrop against which a discussion occurs or data are created. We have slowly come to expect that the meanings of terms such as "freedom fighter", "gender", and "migration" vary significantly across time and geographic space, e.g., across nations. However, the same holds for many seemingly more objective terms and even for scientific terminology. For example, there are several hundred different, and often legally binding, definitions of "forest" or "city." This variability makes studying many of society's most pressing questions, such as climate-induced migration, extremely challenging. Human data scientists spend up to 80% of a project's resources addressing such data challenges in a process called data wrangling, namely, getting data into shape. AI cannot yet do this.
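The effect of competing definitions can be shown with a single parcel of land evaluated under two definitions of "forest". The first set of thresholds follows the FAO Forest Resources Assessment definition (more than 0.5 ha, trees taller than 5 m, canopy cover above 10%), simplified by omitting its land-use and "able to reach these thresholds" clauses; the second definition and the parcel itself are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ForestDefinition:
    name: str
    min_area_ha: float
    min_tree_height_m: float
    min_canopy_cover_pct: float

    def is_forest(self, area_ha, tree_height_m, canopy_cover_pct):
        # Every threshold must be exceeded for the parcel to count as forest.
        return (area_ha > self.min_area_ha
                and tree_height_m > self.min_tree_height_m
                and canopy_cover_pct > self.min_canopy_cover_pct)

DEFINITIONS = [
    ForestDefinition("FAO FRA (simplified)", 0.5, 5.0, 10.0),
    ForestDefinition("hypothetical national definition", 1.0, 5.0, 30.0),
]

# One hypothetical parcel: 2 ha, 8 m trees, 20% canopy cover.
parcel = dict(area_ha=2.0, tree_height_m=8.0, canopy_cover_pct=20.0)
for d in DEFINITIONS:
    print(d.name, d.is_forest(**parcel))
```

The same land counts as forest under one definition and not under the other, so any statistic aggregated across such sources silently mixes incompatible categories.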

Currently, one of the most prominent candidates for making data more AI-ready is knowledge graphs. In contrast to typical data infrastructures that merely handle access to data, these graphs are large-scale structures in which billions of individual entities from across highly heterogeneous sources describing places, people, objects, and events are densely interlinked in a human- and machine-readable form. Our KnowWhereGraph, for instance, is such a graph and consists of over 40 billion statements (facts, so to speak) across more than 30 interlinked data layers, ranging from health and population data, infrastructure, places, climate variables, crops, and soil quality to past extreme events such as landslides, together with their impact assessments.
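At its core, a knowledge graph stores statements as subject-predicate-object triples that can be followed across data layers. The following toy sketch uses plain Python tuples with hypothetical identifiers, instead of the RDF and SPARQL tooling that production graphs typically rely on, to show how interlinked statements yield a simple geographic summary.

```python
# Each statement is a (subject, predicate, object) triple; all IDs are hypothetical.
triples = {
    ("place:Vienna", "type", "City"),
    ("place:Vienna", "locatedIn", "place:Austria"),
    ("event:Flood1", "type", "Flood"),
    ("event:Flood1", "affected", "place:Vienna"),
    ("event:Landslide1", "type", "Landslide"),
    ("event:Landslide1", "affected", "place:Austria"),
}

def match(pattern, graph):
    """Return triples matching a (s, p, o) pattern; None acts as a wildcard."""
    return [t for t in graph
            if all(p is None or p == v for p, v in zip(pattern, t))]

def events_affecting(place, graph):
    """Follow 'affected' links into a place and report each event's type."""
    results = []
    for event, _, _ in match((None, "affected", place), graph):
        for _, _, event_type in match((event, "type", None), graph):
            results.append((event, event_type))
    return sorted(results)

def summarize(place, graph):
    """Summarize events for a place and for the places it lies in (one level up only)."""
    places = [place] + [o for _, _, o in match((place, "locatedIn", None), graph)]
    return {p: events_affecting(p, graph) for p in places}

print(summarize("place:Vienna", triples))
```

The summary for Vienna picks up the landslide only because the graph links Vienna to Austria; that interlinking, rather than any single dataset, is what provides the contextual background.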

KnowWhereGraph's ultimate goal is to deliver geographic summaries for any place on Earth within seconds, providing AI with the contextual background knowledge required to answer questions instead of dreaming up results. While many commercial AI systems utilize their own enterprise graphs, others use Wikipedia's sister project Wikidata. Such graphs are a key source of the data used in retrieval-augmented generation (RAG).

In principle, then, it should be easy for an AI chatbot to retrieve the number of people living in Vienna at a user's request, but it isn't. The reason is that there are dozens of competing population figures: government statistics, statistical estimates, third-party estimates, (core) city versus metropolitan area statistics, and so forth. Wikipedia alone reports dozens of population counts for Vienna, e.g., depending on the language version. Which data source should an AI prioritize? For now, human machine learning experts make these decisions in the background.
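Choosing among competing figures is ultimately a policy decision. The sketch below makes one such policy explicit: keep only figures for the requested spatial unit, then prefer official statistics over estimates and newer over older figures. The sources, years, ranking, and placeholder values are all hypothetical; no real population counts are reproduced.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PopulationFigure:
    source: str        # hypothetical source label
    kind: str          # "official", "estimate", or "third-party"
    spatial_unit: str  # "core city" or "metropolitan area"
    year: int
    value: str         # placeholder; no real figures are reproduced here

# Lower rank = more trusted. This ordering is an assumption, not a standard.
KIND_RANK = {"official": 0, "estimate": 1, "third-party": 2}

def pick_figure(candidates, spatial_unit):
    """Keep figures for the requested unit, then prefer trusted kinds and recent years."""
    eligible = [c for c in candidates if c.spatial_unit == spatial_unit]
    if not eligible:
        return None
    return min(eligible, key=lambda c: (KIND_RANK[c.kind], -c.year))

candidates = [
    PopulationFigure("source A", "third-party", "core city", 2024, "<value A>"),
    PopulationFigure("source B", "official", "core city", 2023, "<value B>"),
    PopulationFigure("source C", "official", "metropolitan area", 2024, "<value C>"),
    PopulationFigure("source D", "estimate", "core city", 2024, "<value D>"),
]
print(pick_figure(candidates, "core city").source)          # source B
print(pick_figure(candidates, "metropolitan area").source)  # source C
```

Swapping the ranking, for example favoring the most recent figure regardless of kind, would return a different source; writing the policy down at least makes that choice visible and debatable.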

Biased data, biased representation

Yet again, the answer to this question is geographic. It turns out that what we know about places, cultures, people, and events differs in its degree of localness. Put simply, most of the information about the United States that is available to LLMs and RAG systems comes from the US. However, this changes rapidly as we look at the Global South, Asia, and even parts of Europe. For many of these countries, the majority of information, e.g., webpages, comes from the US, France, and a few other countries instead of from the countries themselves. For our Vienna example, would you trust the population count from the German Wikipedia version more (as it is more likely written by locals) or the one from the English/international version?
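The degree of localness can be made concrete as the share of sources about a country that were published in that country. The following sketch computes this share from toy records; the records are invented for illustration and are not measurements, and in practice determining where a page was published is itself a hard problem.

```python
from collections import defaultdict

def localness(records):
    """Share of sources about each country that were published in that country."""
    total = defaultdict(int)
    local = defaultdict(int)
    for about, published_in in records:
        total[about] += 1
        if about == published_in:
            local[about] += 1
    return {country: local[country] / total[country] for country in total}

# Hypothetical toy records: (country the page is about, country it was published in).
records = [
    ("US", "US"), ("US", "US"), ("US", "US"), ("US", "FR"),
    ("AT", "AT"), ("AT", "US"), ("AT", "DE"), ("AT", "US"),
]
print(localness(records))  # {'US': 0.75, 'AT': 0.25}
```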

Statistics describing the geographic distribution of training images for AI models that generate images from text are even more concerning. For one popular dataset, for instance, China and India combined account for only 3% of the training images. The big question is, does this matter? Our current research indicates that it does. "Geography according to ChatGPT" does not always truthfully reflect local heterogeneity. As we increasingly learn about the world around us through the eyes of AI systems, it is problematic if an image generator, when prompted to make an image of a mountain, always returns the Appalachians and never Kilimanjaro.

Both industry and research are aware of these and other biases. However, debiasing is not an objective task, especially as it is unclear whether we want AI to show the world as it is or as we aspire for it to be. Google, for instance, recently had to publicly admit to "missing the mark" when its AI system, while trying to increase diversity in its results, also depicted Black and Asian Nazi soldiers. Ideally, deciding how LLMs should conform to societal goals would be a matter of democratic consensus, not of closed-door meetings and technology. Otherwise, we risk turning the first "L" in LLM from "large" into "lying." For now, answering our initial question, "Do we know what AI will know?", also requires asking: "Do we know what the engineers we have trusted with debiasing AI know?"

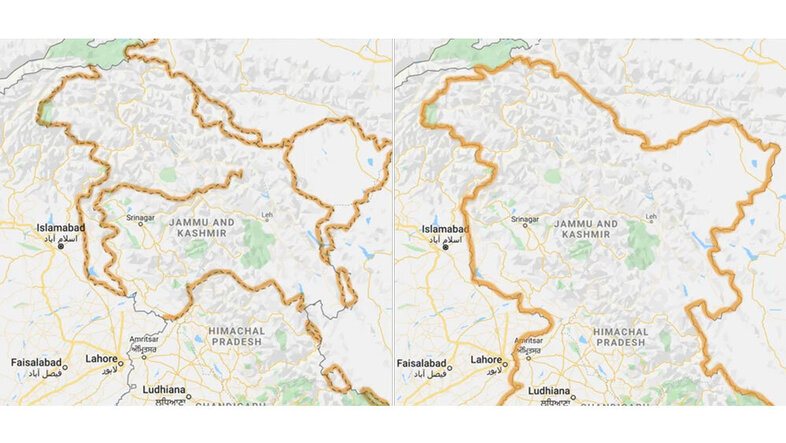

Finally, it is worth asking what truth means in an AI context. By now, you will not be surprised to read that truth also has a spatial and temporal dimension. Whether a statement is true depends on when and even where it is made. Famously, Google Maps shows different borders for the Kashmir region depending on whether it is viewed from India or from Pakistan, as the borders are contested. Consequently, "facts" about the overall area or population of these countries also depend on where you are when you ask. Which answer do we expect from an AI? Or should there be many local AIs? Data about the world, when taken at scale, is not contradiction-free, and these contradictions are not data errors but reflect differences in local cultures, customs, and laws.
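One way to let an AI system handle such contradictions without discarding them is to store each statement together with the context in which it holds and to resolve queries against the asker's context, similar in spirit to how Wikidata attaches qualifiers to statements. The minimal sketch below covers only the spatial dimension; the statement records, the country codes used as locations, and the fallback rule are hypothetical, and a temporal dimension could be added in the same way with validity intervals.

```python
# Hypothetical context-qualified statements: the same question has different
# accepted answers depending on where it is asked. Answers are placeholders.
STATEMENTS = [
    {"subject": "Kashmir border", "where": "IN", "answer": "<depiction shown in India>"},
    {"subject": "Kashmir border", "where": "PK", "answer": "<depiction shown in Pakistan>"},
    {"subject": "Kashmir border", "where": None, "answer": "<depiction shown elsewhere>"},
]

def answer(subject, asked_from, statements):
    """Prefer a statement scoped to the asker's location, else fall back to an unscoped one."""
    scoped = [s for s in statements if s["subject"] == subject and s["where"] == asked_from]
    if scoped:
        return scoped[0]["answer"]
    default = [s for s in statements if s["subject"] == subject and s["where"] is None]
    return default[0]["answer"] if default else None

print(answer("Kashmir border", "IN", STATEMENTS))
print(answer("Kashmir border", "AT", STATEMENTS))
```

Keeping all variants, rather than overwriting them with a single "correct" value, preserves the fact that the disagreement itself is information about the world.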

To know what AI will know, we need to understand how AI represents the world around us, while acknowledging the geographic, cultural, and temporal contexts that shape the training data it has access to.